
Michael Vaughan’s August 7 Update

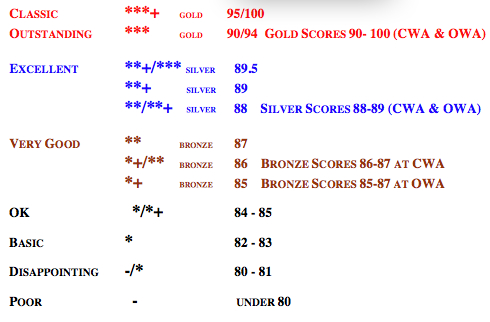

As for my quest for the perfect scoring system, it still eludes me. While I personally score with numbers, for the past 20 years I have published my ratings using the pre-Robert Parker star system. Why? Because published numbers have become so ubiquitous that they are almost meaningless. Anyone can give a wine a number (any number) without really understanding its implications. More and more writers rate out of 100 points because consumers find the scores extremely easy to use; you really don’t have to be a wine expert to understand them. Just as with the ever-increasing number of wine awards, we are now drowning in a sea of numbers. In some instances, they are simply based on the whim or mood of the taster. While every writer is capable of assigning a score, what’s lacking is rigour, uniformity and consistency. This may not matter to casual drinkers, who don’t care how yesterday’s 87 (a bronze medal winner) stacks up against today’s 88 (a silver medal winner). After all, they’re just trying to track down something to enjoy. Of course, some writers use numbers in a totally meaningless or, worse yet, commercial manner.

To be a critic, one really has to have an extremely broad tasting base supported by extensive knowledge. Simply liking or disliking a wine will not do. If you are tasting a Pinot Noir, you have to know what Pinot Noir should taste like from each region. How else can you score the wine? You can’t, for instance, expect an Australian Pinot Noir to necessarily taste like a classic French Burgundy, or vice versa. Thankfully, we do have a hierarchy of accreditation, from Master of Wine (MW) to various sommelier qualifications. Even within this group, there are the super tasters and the rest. And you can be a great writer without being a great taster, and vice versa. Nevertheless, it’s a shame that writers’ palates are not rigorously tested.
The essence of my personal dilemma with the 100-point system is that we are increasingly being coerced into using it. In fact, the full 100 points don’t exist. It really is a 20-point system running from 80 to 100 points or, at most, a 25-point system in which a really terrible wine receives 75 points. As a guide, here is a comparison of my current star system, scores out of 100 points, and the gold, silver and bronze awards of two major Canadian competitions: Tony Aspler’s Ontario Wine Awards (OWA) and the Wine Access Canadian Wine Awards (CWA). These are the scoring systems that the judges have to work with.

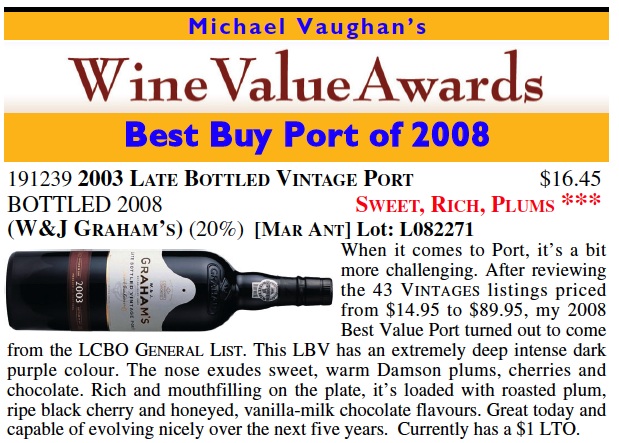

It’s hard to believe that, on the 100-point system, a bronze and a gold can be separated by as little as 3 or 4 points. In my grading system, a bronze-award winner rated Very Good at 87 points gets two stars (\*\*), while a gold-award winner rated Outstanding at 90 points gets three stars (\*\*\*). Although there is only a 3-point spread between them, the jump from two stars to three seems much more significant. Readers can readily see the difference in stars, whereas it is easy to forget that 86-87 is bronze, 88-89 is silver and 90-plus is gold.

At the All-Canadian Wine Championships (ACWC) it gets worse. This year a total of 1071 wines (the largest entry of any Canadian competition) were judged using the 100-point system. Each wine’s final score is the average of the judges’ scores, and the Best of Category medal is awarded to the single highest-rated wine. Then it falls apart: the awards have nothing to do with the scores! Here is the ACWC rule: "Gold awards of merit will be awarded to those wines scoring in the top 10 percentile". This means that ACWC medals have no fixed numeric meaning whatsoever. If, for instance, an entire flight averaged only 85 to 87 points (bronze territory at best), the top 10% would still get gold medals and the next 10% silver. To my mind, this is both intellectually shoddy and deceptive. It tends to confirm allegations that some wine competitions may have more to do with making profits for their organizers than anything else. It’s also unsettling that competition organizers charge significant submission fees and, in some instances, insist that winners buy advertising after the awards. Can we really expect wine writers, who act as judges and are paid as much as $1,000 plus all expenses, to be openly critical of failures in the judging process? Unlike wine competition "winners", the evaluations of a knowledgeable critic (be it Robert Parker, Jancis Robinson, etc.) will be true to a set of standards and experience, subject to that individual’s intrinsic ability to taste consistently.

Last year I set up the Wine Value Awards on a no-fee basis. You may want to read my December 24th feature on some of my top wines for 2008. My top value Port for 2008 is now available on a $1 LTO (Limited Time Offer).

A New Wine Value Award Winner

It is currently on a $1 LTO, which means it will set you back only $7.95 a bottle (until August 16th). For those of you who enjoyed my highly recommended Vintages release best buy from South Africa, Café Culture Pinotage, I think you are going to enjoy this \*\*/\*\*+ award winner, Obikwa 2008 Shiraz (527499). Here’s a very juicy, albeit somewhat lighter, coffee-mocha-tinged, very slightly smoky, fruity berry-cherry-flavoured crowd-pleaser. It is terrific with BBQ ribs, hamburgers and almost anything off the grill. It comes with a convenient screwcap closure and is widely available on the general list (also in magnums).
